When Language Models Cherry-Pick References: A Subtle but Serious Threat to Critical Discourse


6/6/2025 · 2 min read

Large Language Models (LLMs) are revolutionizing the way we interact with information. But as we race to integrate them into academic, policy, and professional workflows, we must pause and ask: What kind of reasoning are we reinforcing?

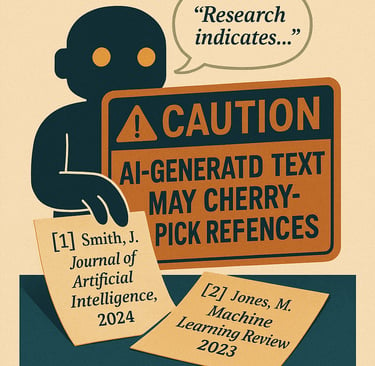

Much attention has been paid to LLMs "hallucinating" citations — inventing sources that don’t exist. But there's another issue, more insidious because it appears correct on the surface: the cherry-picking of citations to support a pre-defined argument.

Prompted to Persuade, Not to Explore

The typical prompt — “Generate a sentence with references to support my argument” — is inherently biased. It instructs the model not to investigate a question, but to validate a position. As a result, the model surfaces only the references and evidence that align with the given conclusion, while ignoring counterarguments, methodological critiques, or unresolved debates in the literature.

This practice, even when technically “correct,” undermines the purpose of research and deliberation. It replaces critical engagement with rhetorical convenience.

LLMs, unless explicitly designed otherwise, are tools of fluency — not scrutiny.

Cherry-Picking Isn't New — But It's Easier Than Ever

Cherry-picking is a well-known bias in academic and policy writing. But what makes it more dangerous in the LLM era is automation at scale:

A student can instantly generate multiple references that appear to back a weak thesis.

A consultant can draft a report that favors a client’s position, skipping inconvenient evidence.

A policymaker may be fed one-sided summaries that reinforce existing bias loops.

The danger is not fabrication — it is false completeness. Readers may assume that the LLM has “read everything” and synthesized a balanced view. In reality, it has only reinforced the narrow frame of the prompt.

What Should Responsible Use Look Like?

If we want to maintain the epistemic integrity of our institutions, we must change how we use these tools. Here are a few principles to consider:

Ask for tension, not consensus. Prompt models to show multiple sides of an issue. For example: “What are the competing perspectives on X, and what are their evidentiary bases?”

Use retrieval-based systems. When references matter, prefer retrieval-augmented generation (RAG), which grounds answers in retrieved documents that a reader can check, or models built with explicit safeguards for citation integrity.

Design prompts for critical review. Instead of “Support this argument,” try: “What assumptions underpin this claim, and what sources challenge it?”

Remain human-in-the-loop. Always treat LLM-generated outputs as a starting point, not a final conclusion.
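
As a rough illustration of the prompting principles above, the sketch below turns a claim into the two exploratory prompts quoted in this post instead of a "support my argument" request. The helper name `build_review_prompts` and the dictionary keys are hypothetical, chosen only for this example; the sketch builds prompt strings and does not call any model.

```python
from typing import Dict


def build_review_prompts(claim: str) -> Dict[str, str]:
    """Build exploratory prompts for a claim (hypothetical helper).

    The wording follows the two example prompts in this post: one asks
    for competing perspectives, the other for assumptions and challenges.
    """
    # Normalise the claim so it reads cleanly inside a sentence.
    claim = claim.strip().rstrip(".")
    return {
        "competing_perspectives": (
            f"What are the competing perspectives on the claim that {claim}, "
            "and what are their evidentiary bases?"
        ),
        "critical_review": (
            f"What assumptions underpin the claim that {claim}, "
            "and what sources challenge it?"
        ),
    }


if __name__ == "__main__":
    # Example claim, invented purely for illustration.
    prompts = build_review_prompts("remote work raises productivity.")
    for name, prompt in prompts.items():
        print(f"{name}: {prompt}")
```

Whatever a model returns for these prompts is still only a starting point: the sources it names need to be read and checked by a person.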

What We Lose Without Disagreement

Critical thinking requires conflict. Research progresses through disagreement, revision, and debate. If LLMs are used primarily to confirm rather than confront, we risk building echo chambers with the gloss of academic polish.

The future of responsible AI is not just about making models more powerful — it is about how we shape our prompts, our expectations, and our epistemologies.

Arash Hajikhani, PhD
